Background

- An Impala usage problem encountered in production;

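- In production, this architecture currently serves 100+ analysts, machine learning engineers, and modeling engineers. It holds user data at a scale of nearly 100 million users; the largest incremental datasets grow by 20M+ rows per day; there are 800+ tables in total; and data-heat statistics show 4,000+ queries per day (counting queries, not API calls). All complex queries run on this Impala MPP architecture;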

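- This post covers approaches to most query out-of-memory problems, along with the relevant configuration details; use the table of contents to jump to the part you need;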

Error keywords

Memory limit exceeded: Failed to allocate row batch

ERROR: Memory limit exceeded: Failed to allocate row batch

EXCHANGE_NODE (id=9) could not allocate 8.00 KB without exceeding limit.

Error occurred on backend hostsxx:22000

Memory left in process limit: 17.55 GB

Memory left in query limit: -387.79 KB

Query(d94a6f24497183ad:1f90758400000000): memory limit exceeded. Limit=220.09 MB Reservation=139.19 MB ReservationLimit=176.07 MB OtherMemory=81.28 MB Total=220.47 MB Peak=220.47 MB

Unclaimed reservations: Reservation=17.94 MB OtherMemory=0 Total=17.94 MB Peak=51.94 MB

Fragment d94a6f24497183ad:1f90758400000003: Reservation=0 OtherMemory=0 Total=0 Peak=2.14 MB

HDFS_SCAN_NODE (id=0): Reservation=0 OtherMemory=0 Total=0 Peak=2.01 MB

KrpcDataStreamSender (dst_id=8): Total=0 Peak=128.00 KB

CodeGen: Total=0 Peak=97.00 KB

Fragment d94a6f24497183ad:1f90758400000064: Reservation=0 OtherMemory=35.42 MB Total=35.42 MB Peak=35.42 MB

HASH_JOIN_NODE (id=6): Total=84.25 KB Peak=84.25 KB

Exprs: Total=30.12 KB Peak=30.12 KB

Hash Join Builder (join_node_id=6): Total=30.12 KB Peak=30.12 KB

Hash Join Builder (join_node_id=6) Exprs: Total=30.12 KB Peak=30.12 KB

SELECT_NODE (id=4): Total=0 Peak=0

ANALYTIC_EVAL_NODE (id=3): Total=4.00 KB Peak=4.00 KB

Exprs: Total=4.00 KB Peak=4.00 KB

SORT_NODE (id=2): Total=0 Peak=0

EXCHANGE_NODE (id=9): Reservation=12.38 MB OtherMemory=382.42 KB Total=12.75 MB Peak=12.75 MB

KrpcDeferredRpcs: Total=382.42 KB Peak=382.42 KB

EXCHANGE_NODE (id=10): Reservation=18.09 MB OtherMemory=1.11 MB Total=19.20 MB Peak=19.20 MB

KrpcDeferredRpcs: Total=1.11 MB Peak=1.11 MB

KrpcDataStreamSender (dst_id=11): Total=3.59 KB Peak=3.59 KB

CodeGen: Total=3.35 MB Peak=3.35 MB

Fragment d94a6f24497183ad:1f90758400000032: Reservation=16.00 MB OtherMemory=15.07 MB Total=31.07 MB Peak=73.45 MB

HDFS_SCAN_NODE (id=1): Reservation=16.00 MB OtherMemory=13.64 MB Total=29.64 MB Peak=72.07 MB

Exprs: Total=4.00 KB Peak=8.00 KB

Queued Batches: Total=13.64 MB Peak=13.64 MB

KrpcDataStreamSender (dst_id=9): Total=1.37 MB Peak=1.62 MB

KrpcDataStreamSender (dst_id=9) Exprs: Total=32.00 KB Peak=32.00 KB

CodeGen: Total=7.45 KB Peak=1.30 MB

Fragment d94a6f24497183ad:1f9075840000004b: Reservation=64.75 MB OtherMemory=28.92 MB Total=93.67 MB Peak=93.67 MB

HDFS_SCAN_NODE (id=5): Reservation=64.75 MB OtherMemory=28.56 MB Total=93.31 MB Peak=93.31 MB

Exprs: Total=12.00 KB Peak=12.00 KB

Queued Batches: Total=19.60 MB Peak=19.60 MB

KrpcDataStreamSender (dst_id=10): Total=86.33 KB Peak=86.33 KB

CodeGen: Total=1.71 KB Peak=498.00 KB

WARNING: The following tables are missing relevant table and/or column statistics.

dm_f_02.xxx,xxxx

Memory limit exceeded: Failed to allocate tuple buffer

HDFS_SCAN_NODE (id=1) could not allocate 49.00 KB without exceeding limit.

Error occurred on backend hostxxx:22000 by fragment d94a6f24497183ad:1f90758400000032

Memory left in process limit: 17.55 GB

Memory left in query limit: -387.79 KB

Query(d94a6f24497183ad:1f90758400000000): memory limit exceeded. Limit=220.09 MB Reservation=139.19 MB ReservationLimit=176.07 MB OtherMemory=81.28 MB Total=220.47 MB Peak=220.47 MB

Unclaimed reservations: Reservation=17.94 MB OtherMemory=0 Total=17.94 MB Peak=51.94 MB

Fragment d94a6f24497183ad:1f90758400000003: Reservation=0 OtherMemory=0 Total=0 Peak=2.14 MB

HDFS_SCAN_NODE (id=0): Reservation=0 OtherMemory=0 Total=0 Peak=2.01 MB

KrpcDataStreamSender (dst_id=8): Total=0 Peak=128.00 KB

CodeGen: Total=0 Peak=97.00 KB

Fragment d94a6f24497183ad:1f90758400000064: Reservation=0 OtherMemory=35.42 MB Total=35.42 MB Peak=35.42 MB

HASH_JOIN_NODE (id=6): Total=84.25 KB Peak=84.25 KB

Exprs: Total=30.12 KB Peak=30.12 KB

Hash Join Builder (join_node_id=6): Total=30.12 KB Peak=30.12 KB

Hash Join Builder (join_node_id=6) Exprs: Total=30.12 KB Peak=30.12 KB

SELECT_NODE (id=4): Total=0 Peak=0

ANALYTIC_EVAL_NODE (id=3): Total=4.00 KB Peak=4.00 KB

Exprs: Total=4.00 KB Peak=4.00 KB

SORT_NODE (id=2): Total=0 Peak=0

EXCHANGE_NODE (id=9): Reservation=12.38 MB OtherMemory=382.42 KB Total=12.75 MB Peak=12.75 MB

KrpcDeferredRpcs: Total=382.42 KB Peak=382.42 KB

EXCHANGE_NODE (id=10): Reservation=18.09 MB OtherMemory=1.11 MB Total=19.20 MB Peak=19.20 MB

KrpcDeferredRpcs: Total=1.11 MB Peak=1.11 MB

KrpcDataStreamSender (dst_id=11): Total=3.59 KB Peak=3.59 KB

CodeGen: Total=3.35 MB Peak=3.35 MB

Fragment d94a6f24497183ad:1f90758400000032: Reservation=56.50 MB OtherMemory=16.95 MB Total=73.45 MB Peak=73.45 MB

HDFS_SCAN_NODE (id=1): Reservation=56.50 MB OtherMemory=15.51 MB Total=72.01 MB Peak=72.07 MB

Exprs: Total=8.00 KB Peak=8.00 KB

Queued Batches: Total=7.35 MB Peak=7.52 MB

KrpcDataStreamSender (dst_id=9): Total=1.37 MB Peak=1.62 MB

KrpcDataStreamSender (dst_id=9) Exprs: Total=32.00 KB Peak=32.00 KB

CodeGen: Total=7.45 KB Peak=1.30 MB

Fragment d94a6f24497183ad:1f9075840000004b: Reservation=64.75 MB OtherMemory=28.92 MB Total=93.67 MB Peak=93.67 MB

HDFS_SCAN_NODE (id=5): Reservation=64.75 MB OtherMemory=28.56 MB Total=93.31 MB Peak=93.31 MB

Exprs: Total=12.00 KB Peak=12.00 KB

Queued Batches: Total=19.60 MB Peak=19.60 MB

KrpcDataStreamSender (dst_id=10): Total=86.33 KB Peak=86.33 KB

CodeGen: Total=1.71 KB Peak=498.00 KB (1 of 3 similar)

SUMMARY:

+---------------------------+--------+----------+----------+---------+------------+-----------+---------------+---------------------------------------------+
| Operator                  | #Hosts | Avg Time | Max Time | #Rows   | Est. #Rows | Peak Mem  | Est. Peak Mem | Detail                                      |
+---------------------------+--------+----------+----------+---------+------------+-----------+---------------+---------------------------------------------+
| F01:ROOT                  | 0      | 0ns      | 0ns      |         |            | 0 B       | 0 B           |                                             |
| 07:HASH JOIN              | 0      | 0ns      | 0ns      | 0       | 1          | 0 B       | 1.94 MB       | INNER JOIN, BROADCAST                       |
| |--11:EXCHANGE            | 0      | 0ns      | 0ns      | 0       | 0          | 0 B       | 116.00 KB     | UNPARTITIONED                               |
| |  F03:EXCHANGE SENDER    | 25     | 5.91us   | 61.24us  |         |            | 3.59 KB   | 0 B           |                                             |
| |  06:HASH JOIN           | 25     | 100.02us | 1.15ms   | 0       | 0          | 84.25 KB  | 1.94 MB       | LEFT OUTER JOIN, BROADCAST                  |
| |  |--10:EXCHANGE         | 25     | 0ns      | 0ns      | 0       | 0          | 19.42 MB  | 40.00 KB      | BROADCAST                                   |
| |  |  F04:EXCHANGE SENDER | 25     | 223.44us | 4.39ms   |         |            | 86.33 KB  | 0 B           |                                             |
| |  |  05:SCAN HDFS        | 25     | 1.07ms   | 11.49ms  | 15.73K  | 0          | 96.15 MB  | 72.00 MB      | rds.tablexxx1                               |
| |  04:SELECT              | 25     | 6.04us   | 109.60us | 0       | 0          | 0 B       | 0 B           |                                             |
| |  03:ANALYTIC            | 25     | 6.01us   | 63.02us  | 0       | 0          | 4.00 KB   | 4.00 MB       |                                             |
| |  02:SORT                | 25     | 31.59us  | 418.64us | 0       | 0          | 0 B       | 12.00 MB      |                                             |
| |  09:EXCHANGE            | 25     | 0ns      | 0ns      | 0       | 0          | 12.97 MB  | 52.00 KB      | HASH(id_card_no_encrypt,to_date(call_time)) |
| |  F02:EXCHANGE SENDER    | 25     | 7.01ms   | 89.18ms  |         |            | 1.68 MB   | 0 B           |                                             |
| |  01:SCAN HDFS           | 25     | 2.20ms   | 24.39ms  | 215.30K | 0          | 78.02 MB  | 96.00 MB      | rds.tablexxx2                               |
| 08:EXCHANGE               | 0      | 0ns      | 0ns      | 0       | 1          | 0 B       | 16.00 KB      | UNPARTITIONED                               |
| F00:EXCHANGE SENDER       | 25     | 13.45us  | 130.56us |         |            | 128.00 KB | 0 B           |                                             |
| 00:SCAN HDFS              | 25     | 321.89us | 3.68ms   | 3       | 1          | 2.02 MB   | 32.00 MB      | dm_f_02.tablexxx3                           |
+---------------------------+--------+----------+----------+---------+------------+-----------+---------------+---------------------------------------------+
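A useful first step is to compare each operator's actual Peak Mem with its Est. Peak Mem in the SUMMARY above. The Python sketch below does this for a few rows copied by hand from that table; `to_bytes` and `underestimated` are illustrative helpers, not part of any Impala tool, and the size parser assumes 1024-based units.

```python
# Flag operators whose actual peak memory exceeded the planner's estimate.
UNITS = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3}


def to_bytes(size: str) -> float:
    """Convert an Impala size string such as '19.42 MB' or '0 B' to bytes (1024-based)."""
    number, unit = size.split()
    return float(number) * UNITS[unit]


# (operator, actual Peak Mem, Est. Peak Mem), copied from the SUMMARY table above.
SUMMARY_ROWS = [
    ("10:EXCHANGE", "19.42 MB", "40.00 KB"),
    ("09:EXCHANGE", "12.97 MB", "52.00 KB"),
    ("05:SCAN HDFS", "96.15 MB", "72.00 MB"),
    ("01:SCAN HDFS", "78.02 MB", "96.00 MB"),
    ("00:SCAN HDFS", "2.02 MB", "32.00 MB"),
]


def underestimated(rows, factor: float = 1.0):
    """Return (operator, actual/estimate ratio) for rows whose actual peak exceeds estimate * factor."""
    result = []
    for operator, peak, estimate in rows:
        actual, est = to_bytes(peak), to_bytes(estimate)
        if est > 0 and actual > est * factor:
            result.append((operator, round(actual / est, 1)))
    return result


if __name__ == "__main__":
    for operator, ratio in underestimated(SUMMARY_ROWS):
        print(f"{operator}: actual peak is {ratio}x the estimate")
```

With these rows the two EXCHANGE nodes stand out at roughly 497x and 255x their estimates, and 05:SCAN HDFS comes in at about 1.3x. Together with the Est. #Rows of 0 for scans that actually returned 15.73K and 215.30K rows, this is consistent with the missing-statistics WARNING in the log.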

Analysis

Current cluster configuration

Impala version

statestored version 3.4.0-RELEASE

mem_limit/default_pool_mem_limit

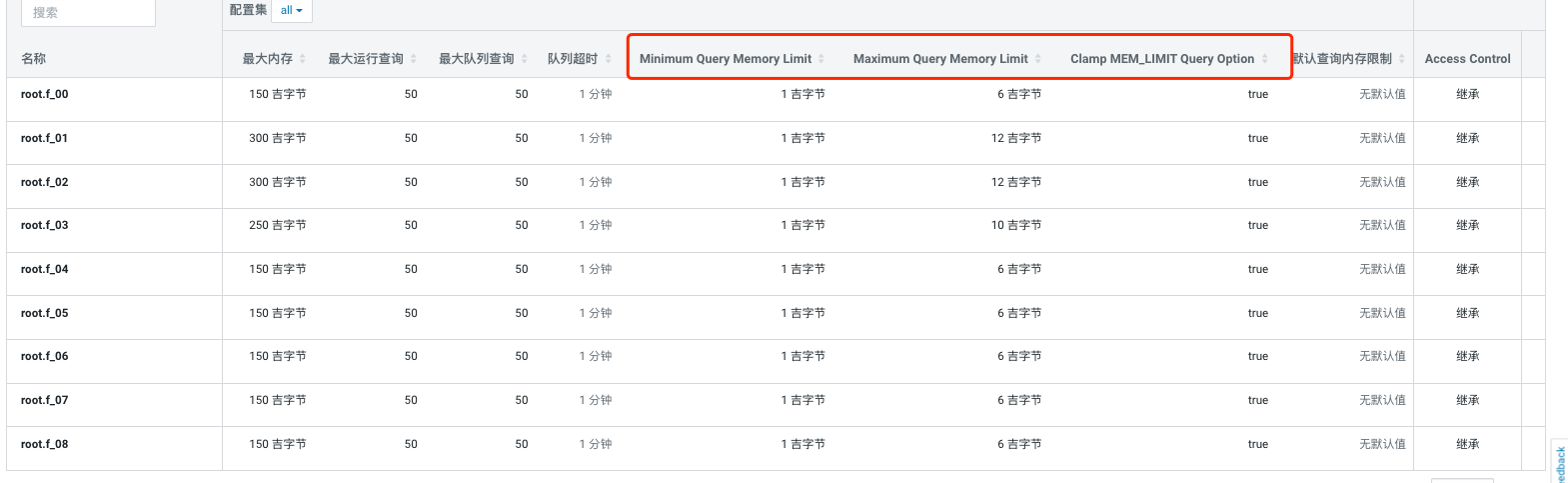

Impala admission control resource pool configuration

- Minimum Query Memory Limit/Maximum Query Memory Limit

- Clamp MEM_LIMIT Query Option

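Impala memory configuration analysis

Official Impala documentation (the most complete reference available)

- https://impala.apache.org/docs/build/impala-3.4.pdf

- [Attachment available on request]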

mem_limit

The MEM_LIMIT query option defines the maximum amount of memory a query can allocate on each node. The total memory that can be used by a query is the MEM_LIMIT times the number of nodes.
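As a worked example of this formula, take the 25 hosts shown in the #Hosts column of the SUMMARY and the 230m and 10g values tried during troubleshooting. In the Python sketch below, `parse_mem` is a hypothetical helper that accepts the k/m/g/t suffixes used in this post and assumes 1024-based units; it is not Impala's own parser.

```python
# Worked example: total memory a query may use = per-node MEM_LIMIT x number of nodes.
SUFFIXES = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}


def parse_mem(value: str) -> int:
    """Parse '230m', '10g' or a plain byte count into bytes (assumes 1024-based units)."""
    value = value.strip().lower()
    if value and value[-1] in SUFFIXES:
        return int(float(value[:-1]) * SUFFIXES[value[-1]])
    return int(value)


def total_query_memory(mem_limit: str, num_nodes: int) -> int:
    """Cluster-wide upper bound for one query, in bytes."""
    return parse_mem(mem_limit) * num_nodes


if __name__ == "__main__":
    for limit in ("230m", "10g"):
        gib = total_query_memory(limit, 25) / 1024 ** 3
        print(f"MEM_LIMIT={limit} on 25 nodes -> up to {gib:.2f} GiB in total")
```

On 25 nodes, 230m allows roughly 5.6 GiB cluster-wide, while 10g allows 250 GiB, which shows how much headroom a blanket shell-level limit can grant a single query.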


Minimum Query Memory Limit/Maximum Query Memory Limit

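- Summary: the Clamp MEM_LIMIT Query Option decides whether the pool's Minimum/Maximum Query Memory Limit and a user-set mem_limit are mutually exclusive, i.e. whether the pool's range overrides the value the user sets;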

A user can override Impala’s choice of memory limit by setting the MEM_LIMIT query option. If the Clamp MEM_LIMIT Query Option setting is set to TRUE and the user sets MEM_LIMIT to a value that is outside of the range specified by these two options, then the effective memory limit will be either the minimum or maximum, depending on whether MEM_LIMIT is lower than or higher than the range.

For example, assume a resource pool with the following parameters set:

• Minimum Query Memory Limit = 2GB

• Maximum Query Memory Limit = 10GB

If a user tries to submit a query with the MEM_LIMIT query option set to 14 GB, the following would happen:

• If Clamp MEM_LIMIT Query Option = true, admission controller would override MEM_LIMIT with 10 GB and attempt admission using that value.

• If Clamp MEM_LIMIT Query Option = false, the admission controller will retain the MEM_LIMIT of 14 GB set by the user and will attempt admission using the value.
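The clamping rule in this example fits in a small function. This is a simplified Python model of the documented behavior for a user-supplied MEM_LIMIT, not Impala's actual code; the function name and the byte-valued arguments are illustrative assumptions.

```python
GB = 1024 ** 3


def effective_mem_limit(user_limit: int, pool_min: int, pool_max: int, clamp: bool) -> int:
    """Per-host limit used for admission when the user set MEM_LIMIT explicitly (simplified model)."""
    if not clamp:
        # Clamp MEM_LIMIT Query Option = false: the user's value is kept, even outside [min, max].
        return user_limit
    # Clamp MEM_LIMIT Query Option = true: values outside the range snap to the nearest bound.
    return max(pool_min, min(user_limit, pool_max))


if __name__ == "__main__":
    # The documented example: pool min 2 GB, pool max 10 GB, user asks for 14 GB.
    for clamp in (True, False):
        limit = effective_mem_limit(14 * GB, 2 * GB, 10 * GB, clamp)
        print(f"clamp={clamp}: admission uses {limit // GB} GB")
```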


Clamp MEM_LIMIT Query Option

- Same as above.

Default Query Memory Limit

- Not applicable to the current version (3.4.0);

The default memory limit applied to queries executing in this pool when no explicit MEM_LIMIT query option is set. The memory limit chosen determines the amount of memory that Impala Admission control will set aside for this query on each host that the query is running on. The aggregate memory across all of the hosts that the query is running on is counted against the pool’s Max Memory.

This option is deprecated in Impala 3.1 and higher and is replaced by Maximum Query Memory Limit and Minimum Query Memory Limit. Do not set this if either Maximum Query Memory Limit or Minimum Query Memory Limit is set.
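The last sentence of the quoted description (the aggregate memory across hosts is counted against the pool's Max Memory) can be sketched as an admission check. This is a deliberately simplified model: `fits_in_pool`, the 100 GB pool size, and the memory already in use are hypothetical, and the real admission controller also applies per-host memory checks and queueing rules that are not modeled here.

```python
MB = 1024 ** 2
GB = 1024 ** 3


def fits_in_pool(per_host_limit: int, num_hosts: int, pool_max_memory: int, pool_in_use: int) -> bool:
    """Simplified check: per-host limit x hosts is charged against the pool's remaining Max Memory."""
    aggregate = per_host_limit * num_hosts
    return pool_in_use + aggregate <= pool_max_memory


if __name__ == "__main__":
    pool_max = 100 * GB  # hypothetical pool Max Memory
    print(fits_in_pool(230 * MB, 25, pool_max, pool_in_use=0))  # 5750 MB aggregate
    print(fits_in_pool(10 * GB, 25, pool_max, pool_in_use=0))   # 250 GB aggregate
```

Under this model, a blanket `set mem_limit = 10g` on 25 hosts would charge 250 GB against the pool for a single query. That is one reason to size limits per query rather than globally.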


Resolution

Adjusting mem_limit

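- The query failed with the memory-limit-exceeded error shown at the top of this post;

- Tried raising the limit in the shell: set mem_limit = 10g;

- The query then ran normally;

- Lowering the value step by step showed that 230m is the smallest mem_limit at which the query no longer fails [the query reports an estimated per-node peak memory of 220.01 MiB];

- Here is the puzzle: given the cluster configuration above, whether or not admission control applied, each node should have received either 20 GB or at most 6 GB;

- The root cause turned out to be that Impala's memory estimation algorithm produces abnormal (far too low) estimates for some queries, and such a query ended up governed by the pool's lower bound, i.e. the Minimum Query Memory Limit setting. The screenshot shows 1g because I have since changed the production value to 1g; before that it was 1m, because without reading the official documentation or the source code I had assumed memory would be allocated somewhere within the min/max range. Raising the minimum solved the problem. (Consistent with this, the Limit=220.09 MB in the log is essentially the planner's estimate, and the WARNING about missing table and/or column statistics points to why that estimate was so low.)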
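The root cause can be modeled in the same way. According to the Impala 3.x admission control documentation, when no MEM_LIMIT is set, admission control chooses a per-host limit between the pool's Minimum and Maximum Query Memory Limit based on the planner's per-host estimate. The Python sketch below is a simplified model of that rule. The function name is illustrative, and treating the 6 GB figure from the bullets above as the pool maximum is an assumption. It shows why a 1m floor let the 220.01 MiB underestimate become the hard limit, while a 1g floor does not.

```python
MB = 1024 ** 2
GB = 1024 ** 3


def chosen_mem_limit(per_host_estimate: int, pool_min: int, pool_max: int) -> int:
    """No MEM_LIMIT set: the per-host estimate, kept within [pool_min, pool_max] (simplified model)."""
    return max(pool_min, min(per_host_estimate, pool_max))


if __name__ == "__main__":
    estimate = int(220.01 * MB)  # estimated per-node peak reported for the failing query
    for pool_min in (1 * MB, 1 * GB):  # the old 1m setting vs. the new 1g setting
        limit = chosen_mem_limit(estimate, pool_min, 6 * GB)
        print(f"pool_min={pool_min // MB} MB -> per-host limit {limit / MB:.2f} MB")
```

With the 1g floor, the same query gets 1 GiB per host, comfortably above the roughly 230 MB it was found to need.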

Recommendations

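- Provide a separate interface for setting each query's mem_limit, so that resources are used more efficiently;

- Check the cluster configuration items and read the official documentation carefully so that nothing is misconfigured;

- A more mature and sophisticated solution to this class of problem is to model memory usage from the data produced by past query executions, and to insert a service layer in front of each query that assigns its mem_limit [see: https://blog.csdn.net/qq_18882219/article/details/78649179];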

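The first and third recommendations (setting mem_limit per query, through a service layer that derives it from past executions) could start as simply as the sketch below. Everything here is hypothetical: the 1.5x headroom, the 256 MB floor, and the 6144 MB ceiling are placeholder values, the history list is made up except for the 220.47 MB peak taken from the log, and real history would come from your own query logs or profiles. The only Impala-specific part is the `SET MEM_LIMIT=...` statement the service would send before the query in the same session.

```python
import math


def recommend_mem_limit_mb(peak_history_mb, headroom=1.5, floor_mb=256, ceiling_mb=6144):
    """Hypothetical sizing rule: largest observed per-host peak x headroom, clamped to [floor, ceiling]."""
    if not peak_history_mb:
        return floor_mb  # no history for this query yet: fall back to the floor
    target = math.ceil(max(peak_history_mb) * headroom)
    return max(floor_mb, min(target, ceiling_mb))


def mem_limit_statement(limit_mb: int) -> str:
    """Render the limit as an Impala SET statement to issue before the query."""
    return f"SET MEM_LIMIT={limit_mb}m;"


if __name__ == "__main__":
    # Hypothetical per-host peaks (MB) from earlier runs; only 220.47 MB comes from the log above.
    history = [180.2, 205.0, 220.47]
    print(mem_limit_statement(recommend_mem_limit_mb(history)))
```

Setting the option per session instead of globally keeps one bad estimate, or one generous blanket value, from affecting every query in the pool.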
